
Roger T. Dean (bio)

University of Canberra

Introduction

Noise is silent, since undifferentiated. Can we sculpt information out of silence? And from the body? Speech is not silent; so it cannot be noise. Noise cannot be commodified, since it is unproductive and uncommunicative. But does noise evolve as we listen to it? As we tune it? As we direct it in space? Does music emerge in the sound? Can we hybridise Noise and Speech? Noise is not only the confusion of communication, the error of measurement, the hiding of signals. It is the macrocosm of all soundscapes. Voicescapes are a simultaneous speaking of the sounds of the world and the brain. So the voice must sometimes speak noise. Noise is sound in which all or a wide span of frequencies of oscillation are contained in fairly similar abundance, be it pink or white. As Roads indicated, 'colored noise.. is a noise band centered around the ... frequency of the oscillator' (Roads, 1996: 337). Speech is not only the sounding of language, but also the hollowing of the frequency spectra of noise, to highlight formants, peaks in the abundance (energy) spectrum of the sound with respect to frequency. I will discuss how to compose and harness features of speech to entrain expressive noise: NoiseSpeech. And how its meanings still risk subordination or suborning in the power structures of culture, but are not profitable, nor capital-productive. Counterintuitively, in his book 'Noise', Jacques Attali speaks mainly of music, 'the organization of noise' (Attali, 1985: 4). But music is, rather, the organization of sound. Noise is both undifferentiated sound, and yet a specialised aspect of sound, a constructor of sonic plausibility, and an ambivalent component of recording(s). Noise is also a restriction on the transmission of thought, or on 'channelization', the term Attali sometimes conflates with the operation of information networks as well as their data flows.
Noise is an important genre in contemporary sound art in which music is tunnelled out of noise-like sounds mainly by digital filtering and transformation processes; NoiseSpeech is proposed as a new genre within noise music. Attali probably did not anticipate that there would be such a genre as noise music. I argue that he would have happily placed it amongst the small number of musical styles (including free jazz and punk of the 1960s-1970s) he recognises as capable of evading commodification and groups optimistically under his forward looking use of the term 'Composition'.

Noise separates us from our perception of the stimulus, it immunises us. It cocoons; we cease to be apprehensive. We are no longer the target. Noise trembles; we relax. It distracts us from the focus of sleep: from visual sonic synaesthetic torpor. Can a bird sing with a clarinet? Or does the bird merely dissemble, separated from a teleological moment by an undeciphered, almost amorphous sonic outburst, which might as well have been black as pink? The European Community has begun intensive noise mapping of its cities, so far most elaborated in colorific presentations of Paris' sound waves (Butler, 2004). Presentations, or Representations? Predictions? Noise enunciates the world. Music announces a world of ideas. The abstraction of computer models of sound distribution belies the capacity for annunciative disturbance. The inner courtyards of Paris breathe their own air, relatively free from the pressures of sonic decibels. But the inner courtyards of the sleeper or the writer, apartment bound, do not always escape the waves of stressful pressure. The inner courtyards of the cell, or the ear, compartment bound, do not always escape the waves of stressful pressure. But noise confirms and supports life.

Noise as stimulation

The arousal of noise, which is itself life. Perception leads to cognition, leads to identification, personification, organization; maintains the organism. Why are we lost outside the city? Is it just confidence? Or is it lack of neural firing, input stimulation, however heterogeneous? The calm of noise. The Art of Noise. Perhaps we cannot process, analyse, comprehend, if we lack basal stimulus, muscle tone, brain tone, ear tone(s), speech-tone(s)... An important issue in the study of both communication and cognition: does a largely invariant and undifferentiated noise background aid or impede communication? There is little doubt that such a flow consumes neural power, even though that consumption is gradually attenuated as the background becomes increasingly familiar, and increasingly gives up its hidden secrets of specificity, its less than obvious differentiations. But might the noise flow also ensure a level of attention that facilitates the acquisition of other stimuli and messages? Kagan's children watching flower stickers on loudspeakers in order to be persuaded to listen to and hear their consonant sounds (Zentner & Kagan, 1996); the noisy roar of the football crowd, the poise and excitement of the players ... This question may have particular importance in auditory display of time-varying data sets: the auditor may require a basal level of attention to hear the subtle variants within a mass of sound, purporting to make the data (or its derivatives) accessible. This may require noise.

Thunder, lightning, fire, earthquake, tremor, street, body these all entrain noise. Does noise represent, sound signify, music narrate and direct? What are the signifiers? Are the entrainments entamed by the body politic? How does a professional access quickly the information in a mass of data representing seismic exploration of a tract of the earth's surface? Or the catastrophic real-time data of regulated international financial transactions? Or the life-threatening data concerning ongoing impacts of environment on aeroplanes? There is modest evidence that sonic representation of these data (known as sonification) may be more rapidly and sometimes more fully comprehended than visual representation (Kramer, 1994). Further interrogation of the nature and utility of sonic representations is necessary, but the opportunity to tame sound into submissive information channels is upon us. The differentiation of noise in space and frequency may provide rapid access to remote data and their immediate or futuristic implications. In agreement with Attali, the only product(ion) in this process which might turn out to be non-commodifiable will be the sonic display technology, not the composer, nor the performer, nor the source information.

Imagining noise, entering noise, penetrating noise, exiting from it. What do we hear? Noise is hardly ever constant, unless in a recording studio. How does a ripple come to the surface, are there stones? What consumes noise: do the streets, buildings, the bricks, respond? What digests noise: do the skin, face, feet, and ears, respond? Do they transform, are they transformed? Or does the world evolve every noise, emerging itself renewed and changed? Who or what composes? The noise artist faces the computer and the space, and perhaps, happily, some others' bodies also. Noise is not often the substance of monetary gain; the composer is no longer even a domestic employee (Attali, 1985: 15-18). The artist is the sonic environment, perceived by whoever wishes to perceive, accessed further by the few. What is the noise composer-performer-improviser doing? At an operational level, usually driving and controlling a computational algorithm which generates and processes sound, sometimes in a cumulative manner, though often quite monodically (generating only one stream of sound). The sound pressure is frequently high, the frequencies ultra low and ultra high, the tympanum stretched. Ear plugs are commonly available. Filtering even before perception! What algorithms are used? Sound generation code, sound filtering code, sound file recording code, sound file re-presentation code. In the latter, the recently recorded (or previously stored) sound file is re-played, misplayed, misplaced, represented. Its internal structure (and usually its order) is changed abruptly and non-linearly, before sounding. It may be merged, mixed, challenged, transformed, through the impact of another recorded sound file, or incoming sound stream, or even incoming data indicating features of the environment. What is the temperature? 
Are people moving how often how fast are they also making noise is it speech is it applause is it undifferentiated response what is needed to entrain their presence if not attention and what will maintain the social ecology of the situation long enough for the composer-performer to complete the process they choose or discover? Again, what algorithms are used? Regardless of programming platform (for example MAX/MSP, Reason, GRM Tools, AudioMulch, Supercollider, and on Mac, PC or Linux), a common strategy is that of looping (repetition). But the loops are coopted into transformation, rather than becoming subordinated into Attali's monotony of repetition. Most recently, polyphony of process and spatialisation of the resultant strands have become important, in part because commercially accessible to the contemporary jongleur who has become a capital-free sound-artist. What discovery? The sound artist can focus on the gradual transformation of a sound world which was already familiar territory, finding and temporarily fixing on new surfaces, new interfaces (as for example with our LowHz performing format, recording of Ng and Dean on cd-r with (Dean, 2003)). Or they may start avowedly with nothing, and generate the first sounds at leisure as a result of environmental inputs, catching it for further enlargement. Starting with noise as a major if not sole component of the sound field is a common ploy. The composer-performer can then dig, with a large spade, the crude algorithms they have constructed, until some stone takes their fancy, differentiating itself from the smaller earth particles. Or they can recombine the noise with other sound sources, previously available to them or newly captured, to see whether the interaction is productive. What do they seek? 
They often 'Set Sail for the Sun' (as in the improvised 'intuitive' piece by Karlheinz Stockhausen of that name), beach tunnel ground head and perhaps only they notice when it arrives and that they can transiently emboss it on the sound space. Or they may use the iterative cycle of process-choose-process-choose, in the hope that something is emergent and it always is (Dean, 2003; H. Smith & Dean, 1997). For the artist, a new disorder is imposed on the starting disorder, or a new order emerges from it. Computational processes such as evolutionary and genetic algorithms, neural nets, and a-life (artificial life, (Whitelaw, 2004)) share with the sound discoverer the process of selection pressure: no sound is innocent, no changes are neutral, and some are preferred and thrive, while others are undesired and shrivel. The problem, as in a-life visual art, is the inefficiency of a purely computational evolutionary process, presumably due to the narrow range of selection pressures which can currently be brought to bear within the computer, in comparison with the density and diversity (unpredictability) of selection pressure (and the long time frame) of 'real' biology. The composer-performer can be much more brutal with the bruits, so that direction is achieved, and is perceptible to the auditors. The human biological process supervenes over the computational noise emerges as selected sound as sonification of the input pressures as sonification of the composer-performer. Composition in Attali's sense, has been achieved by improvisation. Information has appeared within the noise, and the background stimulation may aid its perception and cognition.

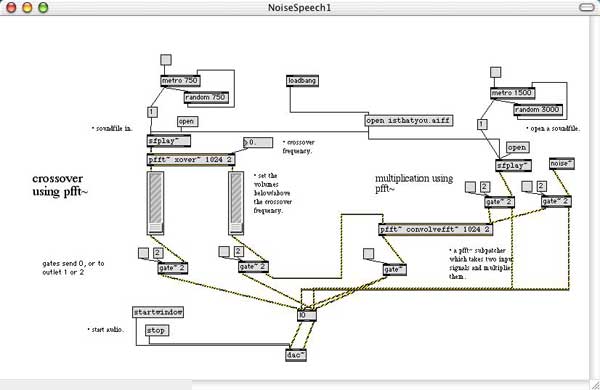

She spoke, but he heard only noise, just vaguely aware that she was talking. How did he know at all? Did she hope to conquer noise? Was she unaware her speech was not linguistically intelligible? Noise overcame the communication line of her speech; but speech remained detectable. What was the role of the computer? Later, the computer spoke; it was not the virtual electronic voice of 'Victoria', but noise. Noise spoke: in NoiseSpeech. If one accepts that noise may be useful or even necessary for the successful impact of sounds (and 'noise' prefaces the attack of many classical instrumental sounds), then . One possibility is to focus on noise itself, as substance or substrate. But another is to hybridise noise with other more differentiated, even explicit, sounds. We have developed techniques for establishing differentials within sounds which give the impression, rightly or wrongly, that they are derived from speech. Since one can recognise such derivations, or apparent derivations, it is likely that this cognitive step influences the appreciation of the sounds, and perhaps even their ultimate affects. If so, NoiseSpeech would be one of the latest examples of Composition, in Attali's sense; earlier examples being post-minimal music, free jazz, which he discussed, and the broader field of expressive noise itself. NoiseSpeech is a neologism, Google providing at the time of writing only 4 returns to this search term (with or without capitals), three being to our work, and one providing the third (commercial) quotation at the head of this article. In contrast, searching for 'noise speech' (two separate words), provides more than 890,000 returns, mostly concerning the two separate phenomena. Speechnoise returns only one hit, the interesting Joglars website (www.cla.umn.edu/joglars), on which one can find Radio Caterpillar, and (for example) EUY (1998) a 'Babelian glossapoetic electromagnetic'. 
This concerns hybridising speech sounds with other sounds, rather than what I focus on in this article. ISI's Web of Knowledge (since 1992, and including external collections) returns nothing for either term or 'concept'. Wolfe has compared the acoustic features of music and speech signals, arguing that they show 'complementary coding' (Wolfe, 2002, 2003). For example, the pitch and rhythmic components of music are categorised, notated and open to precision; those of speech are not, and variability is common, rather than precision. Conversely, plosive and sustained phonemes are implicitly or explicitly notated and may be categorised, whereas articulation and components of instrumental timbre are not. Plosives constitute varying formants, which are not widely used in music; consonants involve categorised timbre components (which are hence notated), whereas it is not common for transient spectral details of timbre to be categorised or notated in instrumental music. Some of these distinctions are more tenuous in computer music, a fact which gives encouragement to the Compositional and cultural endeavour we are discussing here. Evidence from cognitive psychology is limited in addressing the question of what kinds of sound seem like speech while not necessarily being overtly verbal. The classic paper is that of Remez (1981; reviewed in Remez, Rubin, Berns, Pardo, & Lang, 1994), which shows that three formants are needed for a signal to be recognised as speech-like. Using sine wave 'speech', it was shown that the formants need to be within the frequency range of 250-3000Hz, and that instructions to participants could enhance identification of the speech-like quality. This has been argued as evidence of the engagement of a unique 'speech mode' of cognition. It remains under discussion whether speech or vocalisation is 'privileged' in this way; brain magnetic resonance imaging does not reveal areas specifically engaged by speech (e.g. Joanisse & Gati, 2003).
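The idea of sine wave 'speech' can be illustrated with a short sketch: each formant is replaced by a single gliding sinusoid, and three such sinusoids are summed. The Python below is a minimal illustration under stated assumptions, not a reconstruction of the stimuli used in the studies cited. The sample rate, the function name `sine_wave_speech` and the three formant glides in `TRACKS` are hypothetical values chosen only so that every component stays within the 250-3000Hz range mentioned above.

```python
import math

SAMPLE_RATE = 16000  # Hz; an assumed rate, ample for components up to 3000 Hz


def sine_wave_speech(tracks, duration, sample_rate=SAMPLE_RATE):
    """Sum one gliding sinusoid per formant track.

    tracks: list of (start_hz, end_hz, amplitude) tuples. Each sinusoid
    glides linearly from start_hz to end_hz over `duration` seconds.
    """
    n = int(duration * sample_rate)
    phases = [0.0] * len(tracks)
    out = []
    for i in range(n):
        frac = i / n  # position within the glide, from 0 towards 1
        sample = 0.0
        for k, (start_hz, end_hz, amp) in enumerate(tracks):
            freq = start_hz + (end_hz - start_hz) * frac
            # Accumulate phase so the glide is free of discontinuities.
            phases[k] += 2 * math.pi * freq / sample_rate
            sample += amp * math.sin(phases[k])
        out.append(sample)
    peak = max(abs(s) for s in out)
    return [s / peak for s in out]  # normalise to a peak of 1.0


# Hypothetical formant glides (illustrative only), all inside 250-3000 Hz.
TRACKS = [(700.0, 300.0, 1.0), (1200.0, 2300.0, 0.5), (2500.0, 3000.0, 0.25)]
signal = sine_wave_speech(TRACKS, duration=0.5)
```

The resulting list of samples could be written to a sound file for listening; whether it is heard as speech-like would depend, as the studies suggest, on the trajectories chosen and on what the listener is told.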
A considerable body of work has been expended on both speech synthesis and speech recognition, which can be described only briefly. For example, a suite of software for speech synthesis has been developed at IRCAM, Paris, and phase vocoders are commonly used as voice modifiers (analogue and digital). One influence on such work has been the modelling of the vocal tract as a series of filters, taking account of the complex vocal techniques of singers such as Tuvans; it may be important that larger numbers of filters are used in speech synthesis, whose frequency spacing relates to that of the cochlear membrane of the ear. Miranda and others have evolved software for extracting prosodic components from speech, and these have also been used as compositional bases (Miranda, 1998, 2001, 2004). Others have studied speech/noise chimera (like the hybrids discussed above), in which both components retained integrity, but the impact of each is affected by the other. Conversely, automated speech recognition (within sound files) by computers, depends on a large number of features: for example an effective method using around 15 features of the sound signal has been described (Scheirer & Slaney, 1997). The concept of NoiseSpeech which we have described above is distinct from any of these approaches. The cultural capital developed in NoiseSpeech has so far resisted extensive commodification. I will summarise some of the technologies which have allowed us to generate NoiseSpeech in real-time improvisation, and hence also as pre-recorded material and composition. In the beginning, she spoke, but he heard only noise, the noise of talking. 
Such conversion of speech into noise can be achieved when a speaker is detected by a microphone, whose signal is transmitted to another compartment, another physical space, and rather than being simply sounded, is taken into a digital instrument, triggering or generating a complex sound whose envelope is a direct reflection of the speech, but which is not talk. During a continuous speech, the processed sound quite rapidly takes on the impression of a narration, and hence of speech. More generally and flexibly, we have found there are several categories of mechanism for the production of NoiseSpeech. One may commence with a recording of speech. This can then be processed (live or in a recording context), so that phonemes or subphonemes remain detectable, but no words are. However, this is no closer to undifferentiated noise than is a recording of a composed language, known only to the speaker. Further processing can remove completely the detectable phoneme components, yet leave formants, and envelope cues, which suggest speech. Conversely, one may commence with a recorded sound file or live sound stream which has nothing to do with speech, or commence even with a noise stream. Several approaches seem to provide NoiseSpeech from such materials. Cross-over high pass filtering of speech sounds can remove the detectable words, yet leave the impression of speech (compare filters at 1, 3, 5, and 7kHz, where once 3kHz is reached the speech is no longer intelligible as words, but remains speech-like). The importance of high frequency components of speech sounds is also indicated by cognitive studies which show that they assist the location of individual speech streams amongst many (as is needed when one listens to an individual in the midst of a mass of others in a social environment).
There are thus two different functions of the high frequency components of speech: to convey a speechlike impression regardless of whether there are detectable words, and to assist location of individual speaking voices, and hence the identification of the words uttered. Convolving speech or filtered speech with noise can also create the impression that the resulting sound derives from speech, even when words are absent. Phase Vocoding, with appropriate parameters of time stretching and frequency/partial distribution, is also successful, as one would expect from its common use in muzak and other sound worlds, and hence its familiarity and association with speech and vocalisation. Similarly, frequency filtering so as to superimpose specific speech formants is powerful, achieving apparent success even with pure noise sources (no speech sound component included). Formants to be used may be identified from vocal sounds, by spectral analysis (for example using the software Audiosculpt, or the fiddle~ object in MSP). It is interesting that formants vary very much between different speakers, genders, and between vowels and consonants, between speech and vocalisation: different formant structures give different speech (or vocal) impressions. A Fast Fourier Transform followed by applying its inverse for resynthesis using the same or a different sound source can be an effective way of achieving such formant superimposition. Envelope transformation is a third. Some very simple MSP sound performance patches we have written which are basic implementations of these processes are shown in Fig. 1 and 2 (with an accompanying .wav sound file, Speak NoiseSpeech). In performance, we use more elaborate versions (my ensemble austraLYSIS, and particularly its format LowHz, have been active in the noise genre since 2000 (Dean, 2003), and developing NoiseSpeech since 2003; see also www.australysis.com).

Fig. 1. NoiseSpeech1. An MSP (Max Signal Processing) patch for making NoiseSpeech. A speech sound file, chosen by the 'open' command, or by default that named 'isthatyou' (supplied with the MSP programming platform), is processed in either of two ways. Left-hand side: the file is played by sfplay~ into a fast Fourier transform (pfft~) crossover filter. The frequency of crossover can be chosen by the performer, and the relative volumes of the sound components below and above that crossover can be set. The gate~ objects determine whether the output of either component (below/above) passes through the IO object to the dac (digital-to-analogue converter) for sounding. Right-hand side: the output of sfplay~ can be convolved with a noise~ source. Some of the information described in the patch is from the MSP tutorial documents.
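The signal flow of Fig. 1 can be approximated outside MSP. The Python sketch below is an illustration under several assumptions, not a transcription of the patch: it processes a single unwindowed frame with a naive Fourier transform, whereas a real-time implementation would work on overlapping windowed frames; two sinusoids stand in for the speech file; and the function names, sample rate, frame length, gains and the 3kHz crossover are hypothetical choices made for the example. The convolution is shown in its direct time-domain form, which may differ from the way the patch realises it.

```python
import cmath
import math
import random

SAMPLE_RATE = 16000  # Hz (assumed for this sketch)
N = 256              # frame length; bin spacing is SAMPLE_RATE / N = 62.5 Hz


def dft(frame):
    """Naive discrete Fourier transform (slow, but dependency-free)."""
    n = len(frame)
    return [sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft(); returns the real part of each resynthesised sample."""
    n = len(spectrum)
    return [(sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
                 for k in range(n)) / n).real
            for t in range(n)]


def crossover(frame, crossover_hz, sample_rate=SAMPLE_RATE):
    """Split one frame into the components below and above crossover_hz."""
    n = len(frame)
    low_spec, high_spec = [], []
    for k, value in enumerate(dft(frame)):
        # Bins k and n - k carry the same frequency for a real-valued signal.
        freq = min(k, n - k) * sample_rate / n
        low_spec.append(value if freq < crossover_hz else 0j)
        high_spec.append(0j if freq < crossover_hz else value)
    return idft(low_spec), idft(high_spec)


def convolve(signal, kernel):
    """Direct (time-domain) linear convolution."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out


# Stand-in for a speech frame: one component below and one above 3 kHz.
low_tone = [math.sin(2 * math.pi * 500 * t / SAMPLE_RATE) for t in range(N)]
high_tone = [0.5 * math.sin(2 * math.pi * 5000 * t / SAMPLE_RATE) for t in range(N)]
frame = [a + b for a, b in zip(low_tone, high_tone)]

low, high = crossover(frame, crossover_hz=3000)

# Relative volumes of the two bands; a gain of 0 acts like a closed gate.
low_gain, high_gain = 0.0, 1.0
output = [low_gain * a + high_gain * b for a, b in zip(low, high)]

# Right-hand side of the patch: convolve the source with a short noise burst.
rng = random.Random(0)
noise_burst = [rng.uniform(-1.0, 1.0) for _ in range(32)]
noisy = convolve(frame, noise_burst)
```

With the low band gated out, `output` retains only the component above the crossover, which is the condition under which the text reports that words disappear while a speech-like impression remains.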

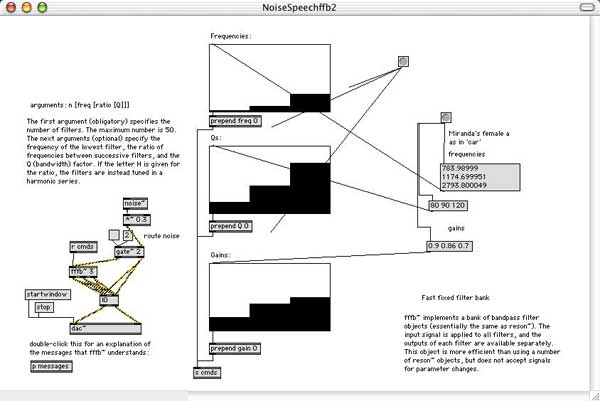

Fig. 2. NoiseSpeechffb2. An MSP patch for bandpass filtering a sound source. In this case, noise is passed through a fast Fourier bank (ffb) of 3 filters, whose parameters are set by frequencies, breadths of frequency, and gains, chosen from parameters defined by Miranda for a female voice speaking the 'a' in the word car. Some of the information described in the patch is from the MSP tutorial documents.

Is it plausible that speech characteristics can emerge from complex noise (or other more differentiated sounds), with such simplicity? As mentioned, earlier evidence suggests that a 'vocal' sound can indeed be constructed by means of 3 or more formants, spread appropriately across the frequency spectrum. Our introspective experience is consistent with this, even in more naturalistic musical environments than have been tested empirically. As Wolfe argues (Wolfe, 2003) 'The ability to discern a set of harmonic frequency components as an entity, and to track simultaneous changes in that set, is an ability to discern one voice or cry from a background sound. It is also much of the ability to follow a melody.' This points to the ecological value of such capacities: for example, they permit identification of an individual organism, which may be valuable in societies before and beyond the human. Clearly, considerable cognitive research remains to be done in this respect, but the outlook is promising. This work is not without its creative context, of course. An ecological perspective on sound has emerged from the soundworks and ideas of Schafer, Truax, Westerkamp and many others, while there are major contributions to the use of digital manipulation of speech for music generation by pioneers such as Dodge and Lansky. We have also developed a technique of voicescape production for use in sound technodramas (for radio and cd), in which an 'ecological' (cf. Keller, 2000) and spatial component is superimposed on voices, in controlled ways, which may be realistic or anti-realistic (H. A. Smith & Dean, 2003).
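The three-filter bank of Fig. 2 can be sketched in Python as a rough illustration of superimposing formants on noise. Several assumptions apply. The filters here are standard two-pole bandpass (biquad) sections rather than the MSP filter-bank object. The formant frequencies, bandwidths and gains in `FORMANTS` are placeholder values chosen for the example and are not Miranda's published parameters. The names `bandpass` and `formant_bank` are hypothetical.

```python
import math
import random

SAMPLE_RATE = 16000  # Hz (assumed for this sketch)


def bandpass(signal, center_hz, bandwidth_hz, sample_rate=SAMPLE_RATE):
    """Two-pole bandpass filter with unity gain at center_hz."""
    q = center_hz / bandwidth_hz
    w0 = 2 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2 * q)
    b0, b2 = alpha, -alpha  # the b1 coefficient is zero for this filter
    a0, a1, a2 = 1 + alpha, -2 * math.cos(w0), 1 - alpha
    out = []
    x1 = x2 = y1 = y2 = 0.0
    for x in signal:
        y = (b0 * x + b2 * x2 - a1 * y1 - a2 * y2) / a0
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out


def formant_bank(signal, formants):
    """Sum the outputs of one bandpass filter per (freq, bandwidth, gain)."""
    bands = [bandpass(signal, freq, bw) for freq, bw, _ in formants]
    return [sum(gain * band[i] for band, (_, _, gain) in zip(bands, formants))
            for i in range(len(signal))]


# Placeholder formants (frequency in Hz, bandwidth in Hz, linear gain).
# These are illustrative assumptions, not values taken from Miranda.
FORMANTS = [(800.0, 80.0, 1.0), (1200.0, 90.0, 0.5), (2800.0, 120.0, 0.25)]

rng = random.Random(0)
noise = [rng.uniform(-1.0, 1.0) for _ in range(SAMPLE_RATE)]  # 1 s of white noise
voiced_noise = formant_bank(noise, FORMANTS)
```

Replacing `FORMANTS` with values obtained by spectral analysis of a particular voice would be the natural next step; the text above notes that different formant structures give different speech or vocal impressions.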

Noise spoke, it enunciated. NoiseSpeech raved of abstractions outside the terrain of spoken language. It entrained the attention of its auditors. It then announced new paths across the surface, and predicted subcultures beneath. The structure of the world was changed, as new sounds emerged and enveloped us. We could now speak also through noise. We make possible the technology. The technology does not make us. The implication that NoiseSpeech can be a functional sub-genre within improvised noise performance is exciting. The opportunities of the resultant Composition are obvious, and the possibility that they in part derive from the recognition of 'attempted' speech is also apparent. Making sense becomes occasionally replaced by making NoiseSpeech, which takes sound through noise into music, and which predicts new spectra, new improvisation, Composition. Noise sonifies Speech; the listener predicts meaning. This music has cultural control, and it is not readily subordinated into a commercial transaction, so it may retain that control for some time.

Acknowledgements: An earlier version of the ideas in sections 6 and 7 was presented at the International Conference on Music and Gesture, University of East Anglia, August 2003. The valuable advice and discussion of Dr Kate Stevens, MARCS Auditory Labs, University of Western Sydney, and Dr Anne Brewster, School of English, University of New South Wales, is much appreciated. Note: Because of restrictions with HTML coding, elisions have been made in some of the quotations from Attali, where words or phrases have been omitted.

Attali, J. (1985). Noise: The Political Economy of Music (translated by B. Massumi). Minneapolis: University of Minnesota Press.

Butler, D. (2004). Sound and Vision. Nature, 427, 480-481.

Cage, J. (1961). Silence. Middleton: Wesleyan University Press.

Dean, R. T. (2003). Hyperimprovisation: Computer Interactive Sound Improvisation; with CD-Rom. Madison, WI: A-R Editions.

Joanisse, M. F., & Gati, J. S. (2003). Overlapping neural regions for processing rapid temporal cues in speech and nonspeech signals. Neuroimage, 19(1), 64-79.

Keller, D. (2000). Compositional processes from an ecological perspective. Leonardo Music Journal, 10, 55-60.

Kramer, G. (Ed.). (1994). Auditory Display. Reading, MA: Addison-Wesley.

Miranda, E. R. (1998). Parallel Computing for Musicians. Paper presented at the V Brazilian Symposium on Computer Music, Belo Horizonte.

Miranda, E. R. (2001). Composing Music with Computers. Oxford: Focal Press.

Miranda, E. R. (Artist). (2004). mother tongue.

Remez, R. E. (1981). Speech perception without traditional speech cues. Science, 212, 947-950.

Remez, R. E., Rubin, P. E., Berns, S. M., Pardo, J. S., & Lang, J. M. (1994). On the perceptual organization of speech. Psychological Review, 101(1), 129-156.

Roads, C. (1996). The Computer Music Tutorial. Cambridge, Mass.: MIT Press.

Scheirer, E., & Slaney, M. (1997). Evaluation of a robust multifeature speech/music discriminator. Paper presented at the ICASSP-97, Munich, Germany.

Smith, H., & Dean, R. T. (1997). Improvisation, Hypermedia and the Arts since 1945. London: Harwood Academic.

Smith, H. A., & Dean, R. T. (2003). Voicescapes and Sonic Structures in the Creation of Sound Technodrama. Performance Research, 8(1), 112-123.

Whitelaw, M. (2004). Metacreation: Art and Artificial Life. Cambridge, Mass.: MIT Press.

Wolfe, J. (2002). Speech and music, acoustics and coding, and what music might be for. Paper presented at the International Conference on Music Perception and Cognition, Sydney.

Wolfe, J. (2003). From idea to acoustics and back again: the creation and analysis of information in music. Paper presented at the 8th Asia Pacific Acoustics Conference, Melbourne.

Zentner, M. R., & Kagan, J. (1996). Perception of music by infants. Nature, 383, 29.
