

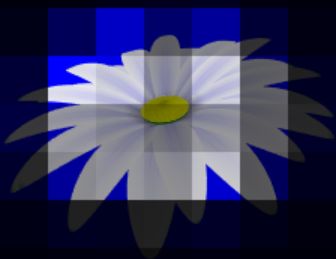

Use the mouse to control the 3D daisy model (the mouse wheel zooms in and out). The brightness of the image is proportional to exp(0.1 (A_ij - max)), where A_ij are the activations of the "daisy" class on the 7x7 grid, and max is the largest A_ij value, or the value of "actMax" if that box is checked.

In the MobileNet layer summary, conv_pw_13_relu outputs a [null,7,7,1024] tensor, and the next layer, global_average_pooling2d_1, reduces it to [null,1024].
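The global_average_pooling2d_1 layer reduces each of the 1024 channels to its mean over the 49 grid positions. A plain-JavaScript sketch of that reduction, using nested arrays instead of tensors and a toy 2x2x3 map (the helper name `globalAveragePool` is illustrative, not part of the page):

```javascript
// Global average pooling over a [H][W][C] feature map given as nested arrays.
// Returns a length-C array holding the mean of each channel over all H*W cells.
function globalAveragePool(fmap) {
  const H = fmap.length, W = fmap[0].length, C = fmap[0][0].length;
  const out = new Array(C).fill(0);
  for (let i = 0; i < H; i++)
    for (let j = 0; j < W; j++)
      for (let c = 0; c < C; c++)
        out[c] += fmap[i][j][c];
  return out.map(v => v / (H * W));
}

// Toy 2x2 grid with 3 channels (MobileNet here: 7x7 grid, 1024 channels)
const fmap = [
  [[1, 0, 2], [3, 0, 2]],
  [[5, 4, 2], [7, 0, 2]],
];
console.log(globalAveragePool(fmap)); // [ 4, 1, 2 ]
```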

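```javascript
// Cut MobileNet after its last convolutional block: output is [null,7,7,1024].
// baseModel and model are used later by classify().
const layer = mobilenet.getLayer('conv_pw_13_relu');
baseModel = tf.model({inputs: mobilenet.inputs, outputs: layer.output});

// Kernel of the final 1x1 classifier convolution; keep only the
// output channel of class 985 ("daisy") -> shape [1,1,1024,1]
const layerPred = mobilenet.getLayer('conv_preds');  // getLayer is synchronous
const weight985 = layerPred.getWeights()[0].slice([0,0,0,985], [1,1,-1,1]);

// "Head" model: a 1x1 convolution with the daisy weights (bias omitted)
model = tf.sequential({
  layers: [
    tf.layers.conv2d({
      inputShape: [7,7,1024], filters: 1, kernelSize: 1,
      useBias: false, weights: [weight985]
    })
  ]
});
```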
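In tf.Tensor.slice, a size of -1 keeps everything from the start index to the end of that axis. Assuming the conv_preds kernel has shape [1,1,1024,1000], the slice therefore keeps all 1024 input features for output channel 985. The index arithmetic behind it, sketched on a flattened row-major kernel with toy sizes (the helper `classColumn` is hypothetical, not a TensorFlow.js function):

```javascript
// Extract one class's weights from a 1x1 conv kernel of shape [1,1,C,K]
// stored flat in row-major order: element (0,0,c,k) sits at index c*K + k.
// Conceptually the same as kernel.slice([0,0,0,cls], [1,1,-1,1]).
function classColumn(flatKernel, C, K, cls) {
  const col = new Float32Array(C);
  for (let c = 0; c < C; c++) col[c] = flatKernel[c * K + cls];
  return col;
}

// Toy kernel: C = 3 input features, K = 4 classes; pick class 2
const kernel = Float32Array.from([
  0, 1, 2, 3,     // feature 0
  10, 11, 12, 13, // feature 1
  20, 21, 22, 23, // feature 2
]);
console.log(Array.from(classColumn(kernel, 3, 4, 2))); // [ 2, 12, 22 ]
```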

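The baseModel returns a [1,7,7,1024] feature map. The "head" model then takes, at each of the 49 grid positions, the dot product of the 1024 features with the weights of the daisy class (index 985), giving a [1,7,7,1] activation map.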
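Because a 1x1 convolution and global averaging are both linear, averaging the daisy activation map gives the same number as pooling the features first and then applying the daisy weights. Assuming the MobileNet classifier is global average pooling followed by conv_preds (dropout is inactive at inference), the "av=" value logged by classify() would then be the daisy logit without its bias term. A toy check in plain JavaScript (2x2 grid, 3 features, made-up weights):

```javascript
// A 1x1 convolution with one filter and no bias: at each grid cell
// the output is the dot product of that cell's feature vector with w.
function conv1x1(fmap, w) {
  return fmap.map(row => row.map(f => f.reduce((s, v, c) => s + v * w[c], 0)));
}

function mean(xs) { return xs.reduce((s, v) => s + v, 0) / xs.length; }

// Toy 2x2 grid with 3 features per cell (MobileNet: 7x7 grid, 1024 features)
const fmap = [
  [[1, 0, 2], [3, 0, 2]],
  [[5, 4, 2], [7, 0, 2]],
];
const w = [0.5, -1, 2]; // hypothetical class weights

const actMap = conv1x1(fmap, w);
console.log(actMap); // [ [ 4.5, 5.5 ], [ 2.5, 7.5 ] ]

// Pool first, then take the dot product with w: same result as averaging actMap
const pooled = [0, 1, 2].map(c => mean(fmap.flat().map(f => f[c])));
const logitFromPool = pooled.reduce((s, v, c) => s + v * w[c], 0);
console.log(mean(actMap.flat()), logitFromPool); // 5 5
```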

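```javascript
async function classify() {
  draw();  // render the current view; the canvas cnv is read below

  const predicted = tf.tidy(() => {
    const image = tf.browser.fromPixels(cnv);  // [height, width, 3], values 0..255
    // affine map to the model's input range (normConst, inpMin defined elsewhere)
    const normalized = image.toFloat().mul(normConst).add(inpMin);
    const batched = normalized.reshape([-1, IMAGE_SIZE, IMAGE_SIZE, 3]);
    const basePredict = baseModel.predict(batched);  // [1,7,7,1024] features
    return model.predict(basePredict);               // [1,7,7,1] daisy activations
  });
  const data = predicted.dataSync();  // 49 activations, row by row over the 7x7 grid
  predicted.dispose();

  // maximum and average activation over the grid
  let ma = data[0], sum = ma;
  for (let i = 1; i < 49; i++) {
    let di = data[i];
    sum += di;
    if (ma < di) ma = di;
  }
  console.log("max= " + ma.toFixed(2) + ", av= " + (sum/49).toFixed(2));

  // per-cell brightness 255*exp(0.1*(A_ij - max)), clamped to 255
  let t = 0;
  let heat_tex = new Uint8Array(7*7);
  if (chkMax) ma = actMax;  // use the fixed "actMax" value instead of the frame maximum
  for (let i = 0; i < 7; i++) {
    for (let j = 0; j < 7; j++, t++)
      heat_tex[t] = Math.min(255*Math.exp(0.1*(data[t] - ma)), 255);
  }
  // ... the rest of classify(), which uses heat_tex, is not shown
}
```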
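The pixel scaling in classify() is an affine map from [0, 255] to the model's input range. Assuming that range is [-1, 1], as with Keras-style MobileNet preprocessing, the two constants could be derived as below; the page's own normConst and inpMin are defined elsewhere and may differ.

```javascript
// Hypothetical constants, assuming the model expects inputs in [-1, 1]
const inpMin = -1;
const inpMax = 1;
const normConst = (inpMax - inpMin) / 255;

// Per-value version of image.toFloat().mul(normConst).add(inpMin)
function normalize(pixel) {
  return pixel * normConst + inpMin;
}

console.log(normalize(0), normalize(255)); // approximately -1 and 1
```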

Strictly, we should use exp(data[t] - ma), but the picture looks better with the 0.1 multiplier, which softens the contrast so that cells with weaker activations stay visible.
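A quick numeric comparison shows the effect of the multiplier: with exp(A_ij - max), a cell only 5 units below the maximum is already almost black, while with the 0.1 factor it keeps about 60% of full brightness. The activation differences below are made up for illustration.

```javascript
// Brightness of a heat-map cell as computed in classify(): 255*exp(k*(a - max)),
// clamped to 255 and stored in a Uint8Array (which truncates the fraction).
function brightness(deltas, k) {
  return Array.from(Uint8Array.from(deltas, d => Math.min(255 * Math.exp(k * d), 255)));
}

// Activation minus maximum for a few hypothetical cells
const deltas = [0, -5, -10, -20];
console.log(brightness(deltas, 1));   // [ 255, 1, 0, 0 ]
console.log(brightness(deltas, 0.1)); // [ 255, 154, 93, 34 ]
```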

For object detection, one can add new layers on top of the base model next...