The WebGL2-compute GEMM shaders based on Cedric Nugteren's tutorial work well on low-end hardware but need more tuning for other GPUs (e.g. Intel and AMD). Newer optimized OpenCL kernels can be found, for example, in the CLBlast library ("Note that CLBlast evolved quite a bit from the tutorials", Cedric).

Intel has many highly optimised kernels (see below). One of them is used for the SLM_8x8_4x16 shader (I just replaced pointers with indexes and wrote out the mad() calls by hand, since GLSL 310 does not support mad()).
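The two rewrites mentioned for the SLM_8x8_4x16 port (pointers replaced by indexes, mad() written out) can be modelled outside the shader. The sketch below is a plain Python model, not the actual shader code: `mad`, `dot_pointer_style` and `dot_index_style` are hypothetical names, the row-major flat buffers are an assumption, and reading "unroll mad()" as "replace `mad(a, b, c)` with `a * b + c`" is my interpretation.

```python
def mad(a, b, c):
    """OpenCL-style multiply-add: a * b + c."""
    return a * b + c


def dot_pointer_style(a_buf, b_buf, row, col, n):
    """Walks two 'pointers' (modelled as running offsets), as an OpenCL kernel might."""
    pa = row * n          # plays the role of `A + row * n`
    pb = col              # plays the role of `B + col`
    acc = 0.0
    for _ in range(n):
        acc = mad(a_buf[pa], b_buf[pb], acc)
        pa += 1           # advance along the row of A
        pb += n           # advance down the column of B
    return acc


def dot_index_style(a_buf, b_buf, row, col, n):
    """Same computation with explicit indexes and mad() written out as a * b + c."""
    acc = 0.0
    for k in range(n):
        acc = a_buf[row * n + k] * b_buf[k * n + col] + acc
    return acc


if __name__ == "__main__":
    n = 4
    a = [float(i) for i in range(n * n)]
    b = [float(2 * i + 1) for i in range(n * n)]
    print(dot_pointer_style(a, b, 1, 2, n), dot_index_style(a, b, 1, 2, n))
```

Both functions perform the multiply-adds in the same order, so they return identical values; only the addressing differs.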

Platforms (1):

[0] Intel(R) OpenCL [Selected]

Devices (1; filtered by type gpu):

[0] Intel(R) UHD Graphics 630 [Selected]

-----------------------------------------

matrix size: ( 1024x1024 ) * ( 1024x1024 )

| Algorithm | Peak Kernel GFlops |
|---|---|
| gemm_naive | 19.144 |
| L3_SLM_8x8_8x16 | 227.814 |
| L3_SLM_8x8_4x16 | 250.566 |
| L3_SLM_8x8_16x16 | 172.774 |
| L3_SIMD_32x2_1x8 | 249.879 |
| L3_SIMD_16x2_1x8 | 245.959 |
| L3_SIMD_16x2_4x8 | 248.497 |
| L3_SIMD_8x4_1x8 | 264.936 |
| L3_SIMD_8x4_8x8 | 266.951 |
| L3_SIMD_8x4_8x8_barrier | 254.461 |
| block_read_32x1_1x8 | 316.018 |
| block_read_32x2_1x8 | 322.534 |
| block_read_32x2_4x8 | 327.082 |
| block_read_32x2_8x8 | 325.702 |
| block_read_16x2_1x8 | 318.779 |
| block_read_16x2_4x8 | 321.469 |
| block_read_16x2_8x8 | 320.089 |
| block_read_16x4_1x8 | 323.488 |
| block_read_16x4_4x8 | 326.225 |
| block_read_16x4_8x8 | 325.595 |

Optimizing Matrix Multiply for Intel Processor Graphics Architecture Gen9 by Jeffrey M. (Dec 23, 2016)

* device name: Intel(R) UHD Graphics 630
* device slm size: 65536
* device max work group size: 256
* Max compute units (GPU): 23
* Max clock frequency (GPU): 1100.000000
* Peak float perf (GPU): 404.800000
* build options: -cl-mad-enable -cl-fast-relaxed-math
* matrix size: 512x512x512

| name | time(ms) | GFLOPS | Efficiency |
|---|---|---|---|
| Unoptimized | 10.2 | 26.4 | 6.5 % |
| L3_SIMD_4x8x8 | 0.9 | 293.2 | 72.4 % |
| MediaBlockRW_SIMD_2x32 | 0.9 | 303.0 | 74.9 % |
| MediaBlockRead_SIMD_1x16_2_fp16 | 0.4 | 596.7 | 147.4 % |
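The GFLOPS and Efficiency figures reported above follow from the matrix size, the kernel time and the peak value. Here is a small sketch of that arithmetic, assuming the usual 2·M·N·K operation count for GEMM. The function names are illustrative and not part of the Intel sample, and the default of 16 FLOP per cycle per compute unit is an assumption chosen because it reproduces the reported 404.8 GFLOPS peak (23 × 1.1 GHz × 16).

```python
def gemm_gflops(m, n, k, time_ms):
    """GFLOPS for an (m x k) * (k x n) product, counting 2*m*n*k operations."""
    return 2.0 * m * n * k / (time_ms * 1e-3) / 1e9


def peak_gflops(compute_units, clock_mhz, flops_per_cycle_per_cu=16):
    """Theoretical fp32 peak; flops_per_cycle_per_cu=16 is an assumed value."""
    return compute_units * clock_mhz * 1e6 * flops_per_cycle_per_cu / 1e9


def efficiency_percent(gflops, peak):
    """Measured throughput as a percentage of the theoretical peak."""
    return 100.0 * gflops / peak


if __name__ == "__main__":
    peak = peak_gflops(23, 1100.0)
    print("peak:", peak)
    print("Unoptimized:", gemm_gflops(512, 512, 512, 10.2))
    for name, gflops in [("L3_SIMD_4x8x8", 293.2),
                         ("MediaBlockRead_SIMD_1x16_2_fp16", 596.7)]:
        print(name, efficiency_percent(gflops, peak))
```

The fp16 kernel exceeds 100 % only because its efficiency is measured against the fp32 peak. The times in the log are rounded to 0.1 ms, so recomputing GFLOPS from them gives only approximate agreement with the GFLOPS column.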

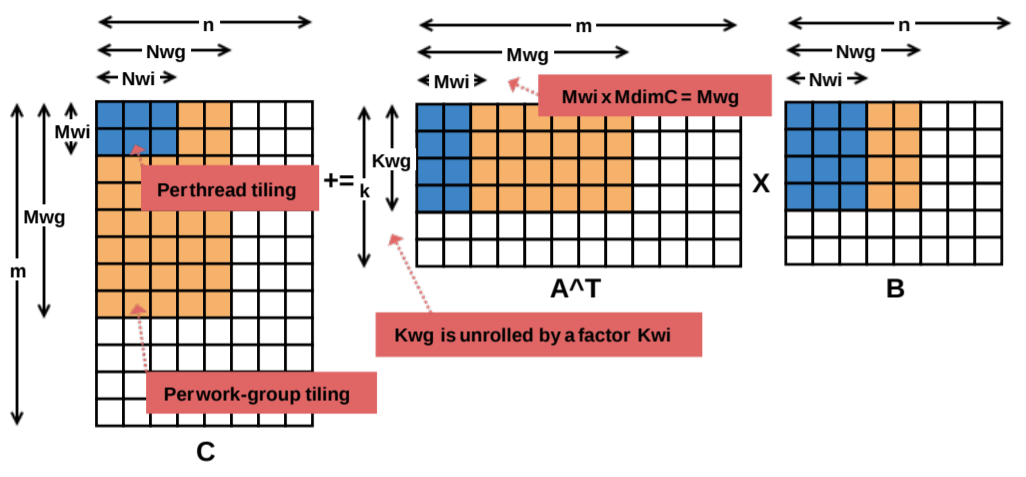

* Found best result 6.70 ms: 320.6 GFLOPS
* Best parameters: GEMMK=1 KREG=4 KWG=1 KWI=1 MDIMA=16 MDIMC=16 MWG=64 NDIMB=4 NDIMC=4 NWG=32 PRECISION=32 SA=0 SB=0 STRM=0 STRN=0 VWM=4 VWN=4
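To make the tuner result easier to read, the parameter string can be parsed and turned into a few derived quantities. This is a sketch under stated assumptions: `parse_params` and `derived_shape` are hypothetical helpers, and the interpretation of the names (MWG/NWG as the work-group tile, MDIMC/NDIMC as the work-group thread grid, SA/SB as local-memory caching of A and B) is my reading of the CLBlast parameter names rather than something stated in this document.

```python
BEST = ("GEMMK=1 KREG=4 KWG=1 KWI=1 MDIMA=16 MDIMC=16 MWG=64 NDIMB=4 NDIMC=4 "
        "NWG=32 PRECISION=32 SA=0 SB=0 STRM=0 STRN=0 VWM=4 VWN=4")


def parse_params(text):
    """Turns 'NAME=VALUE NAME=VALUE ...' into a dict of ints."""
    return {key: int(value)
            for key, value in (item.split("=") for item in text.split())}


def derived_shape(p):
    """Derived quantities, assuming the parameter meanings described above."""
    return {
        "threads_per_workgroup": p["MDIMC"] * p["NDIMC"],
        "outputs_per_thread_m": p["MWG"] // p["MDIMC"],
        "outputs_per_thread_n": p["NWG"] // p["NDIMC"],
        "uses_local_memory": bool(p["SA"] or p["SB"]),
    }


if __name__ == "__main__":
    for name, value in derived_shape(parse_params(BEST)).items():
        print(name, value)
```

Under these assumptions the best configuration uses 64 threads per work-group, each computing a 4×8 block of the output, with no local-memory caching of A or B.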

under construction

"Intel Driver and Support Assistant" and "Intel System Studio 2019" (intel-sw-tools-installation-bundle-win) were used.

https://github.com/intel/clGPU

https://github.com/intel/clGPU/tree/master/experimental/kernels

https://software.intel.com/en-us/iocl-opg-local-memory

https://www.phoronix.com/scan.php?page=news_item&px=Intel-Memory-Regions-Local-Dev

Subgroups

https://www.khronos.org/blog/vulkan-subgroup-tutorial

https://developer.nvidia.com/reading-between-threads-shader-intrinsics