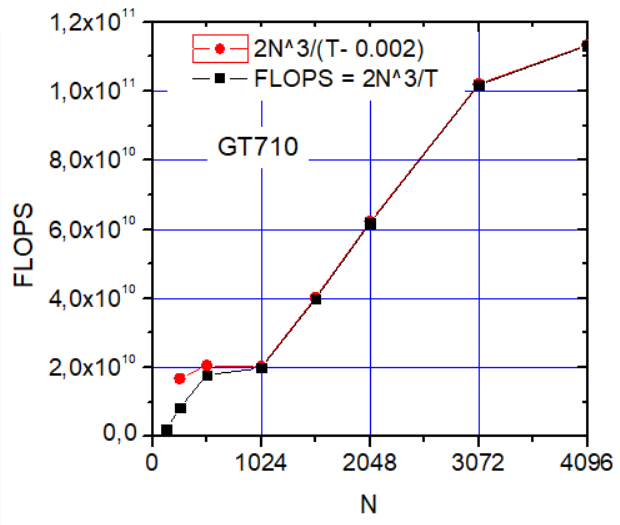

Tested on GeForce GT 710 (Windows 10, 64-bit): 192 cores at 953 MHz, peak performance 366 GFLOPS.
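The quoted peak follows from counting one FMA (2 FLOPs) per core per cycle. Here is a minimal sketch of that arithmetic, assuming exactly that issue rate. The helper name `peak_gflops` is hypothetical.

```python
def peak_gflops(cores: int, clock_mhz: float, flops_per_core_per_cycle: int = 2) -> float:
    """Theoretical peak: every core issues one FMA (counted as 2 FLOPs) per cycle."""
    return cores * clock_mhz * 1e6 * flops_per_core_per_cycle / 1e9


# GeForce GT 710 figures quoted above
peak = peak_gflops(192, 953)
print(f"{peak:.0f} GFLOPS")  # prints "366 GFLOPS"
```

Dividing a measured result by this number gives the fraction of peak reached. For example, the Sandra GEMM score below is roughly 28% of peak.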

Results from the SiSoftware Sandra OpenCL FP32 GPU test:

| Test | Performance |
|---|---|
| GEMM | 104 GFLOPS |
| FFT | 10.8 GFLOPS |
| N Body | 143 GFLOPS |

FMA (float multiply + add) is counted as 2 operations. Overheads in the D3D11 backend depend on SSBO size, therefore the OpenGL backend is used for benchmarks.
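With FMA counted as 2 operations, an (m x k) by (k x n) GEMM performs 2·m·n·k FLOPs. GFLOPS is then that count divided by the measured time. This is a minimal sketch of the bookkeeping, and the helper names are hypothetical.

```python
def gemm_flops(m: int, n: int, k: int) -> int:
    """C = A @ B with A (m x k), B (k x n): m*n*k FMAs, each counted as 2 FLOPs."""
    return 2 * m * n * k


def gflops(m: int, n: int, k: int, seconds: float) -> float:
    """Achieved GFLOPS for one GEMM that took `seconds` of wall time."""
    return gemm_flops(m, n, k) / seconds / 1e9


# Time implied by the 113 GFLOPS SSBO result for N = 4096 in the table below
n = 4096
print(f"{gemm_flops(n, n, n) / 113e9:.2f} s")  # prints "1.22 s"
```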

| N | SSBO | TFjs | RGBA32F | RGBA16F |
|---|---|---|---|---|
| 1024 | 19.8 GFLOPS | 9 GFLOPS | 7.6 GFLOPS | 10 GFLOPS |
| 2048 | 62 GFLOPS | 22 GFLOPS | 20.4 GFLOPS | ~34 GFLOPS |
| 4096 | 113 GFLOPS | 22.8 GFLOPS | 23.6 GFLOPS | ~45 GFLOPS |

HGEMM with HALF_FLOAT textures is almost 2x faster than SGEMM with FLOAT textures on the GT 710, but HGEMM and SGEMM are likely to perform similarly on AMD and Intel GPUs. See also the GEMM tests on Google Pixel; note, however, that TFjs reportedly uses HALF_FLOAT textures on mobile devices. On the desktop, Python + CUDA will be faster anyway.
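The RGBA32F and RGBA16F columns store 4 matrix elements per texel. If GEMM on the GT 710 is memory-bound, which is an assumption and not something measured here, halving the bytes per texel would explain most of the ~2x gap. The sketch below uses a simple hypothetical row-wise packing, where 4 consecutive row elements share one texel. It is not necessarily the layout TFjs uses, and the helper `pack_rgba` is illustrative.

```python
import numpy as np


def pack_rgba(matrix: np.ndarray, dtype=np.float32) -> np.ndarray:
    """Pack a (rows x cols) matrix into a (rows x cols/4 x 4) array of RGBA texels.

    Hypothetical row-wise layout: texel (r, t) holds elements r, 4t..4t+3.
    dtype=np.float32 mimics RGBA32F, dtype=np.float16 mimics RGBA16F.
    """
    rows, cols = matrix.shape
    if cols % 4:
        raise ValueError("cols must be a multiple of 4")
    return matrix.astype(dtype).reshape(rows, cols // 4, 4)


a = np.random.rand(1024, 1024)
# Texture size in MiB for the two formats
print(pack_rgba(a).nbytes // 2**20, pack_rgba(a, np.float16).nbytes // 2**20)  # prints "4 2"
```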

Unfortunately, HALF_FLOAT SSBOs are not supported by WebGL2-compute (see https://bugs.chromium.org/p/angleproject/issues/detail?id=3160).
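A common workaround, which is not taken from this page and is untested here on the GT 710, is to store pairs of halves in a `uint` SSBO. The shader then unpacks them with the GLSL ES built-ins `packHalf2x16` / `unpackHalf2x16`. The NumPy sketch below emulates those two functions on the host side: the first value goes to the low 16 bits and the second to the high 16 bits. The Python helper names are hypothetical.

```python
import numpy as np


def pack_half2x16(x: float, y: float) -> int:
    """Emulate GLSL packHalf2x16: x -> low 16 bits, y -> high 16 bits of a uint32."""
    lo, hi = np.array([x, y], dtype=np.float16).view(np.uint16)
    return int(lo) | (int(hi) << 16)


def unpack_half2x16(word: int) -> tuple[float, float]:
    """Emulate GLSL unpackHalf2x16, the inverse of pack_half2x16."""
    bits = np.array([word & 0xFFFF, (word >> 16) & 0xFFFF], dtype=np.uint16)
    x, y = bits.view(np.float16)
    return float(x), float(y)


print(hex(pack_half2x16(1.0, -2.0)))  # prints "0xc0003c00"
```

Each 32-bit SSBO element then carries two matrix entries, which halves the buffer size in the same way RGBA16F halves the texture size.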

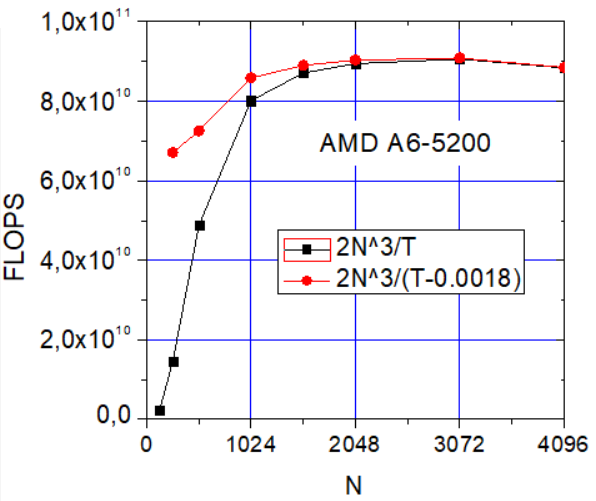

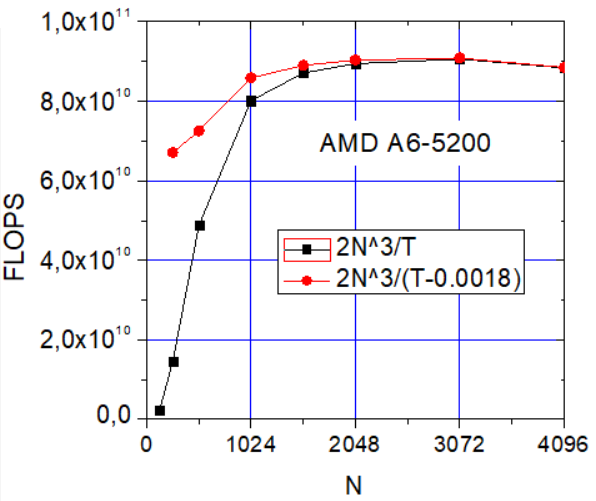

Surprisingly, benchmarks on the small AMD A6-5200 APU and the GT 710 are similar to Cedric's (shaders 6 and 7 are ~10 times faster than shader 1).
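Much of the gap between shader 1 and the later shaders plausibly comes from data reuse. Shader 1 re-reads every operand from global memory, while the faster kernels stage tiles of A and B in shared memory and registers. The NumPy sketch below is only an illustration of that tiling idea, not a port of those shaders. Each `ts x ts` block of C is accumulated from tiles of A and B, the way a work-group would load them once and reuse them. The tile size `ts=4` and the function names are arbitrary choices.

```python
import numpy as np


def gemm_naive(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Shader-1 style: one output element per 'thread', every operand re-read each step."""
    m, k = a.shape
    _, n = b.shape
    c = np.zeros((m, n), dtype=np.result_type(a, b))
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i, p] * b[p, j]
            c[i, j] = acc
    return c


def gemm_tiled(a: np.ndarray, b: np.ndarray, ts: int = 4) -> np.ndarray:
    """Tiled GEMM: each (ts x ts) block of C is built from ts-wide tiles of A and B."""
    m, k = a.shape
    _, n = b.shape
    if m % ts or n % ts or k % ts:
        raise ValueError("all dimensions must be multiples of ts")
    c = np.zeros((m, n), dtype=np.result_type(a, b))
    for i0 in range(0, m, ts):          # one "work-group" per output tile
        for j0 in range(0, n, ts):
            acc = np.zeros((ts, ts), dtype=c.dtype)
            for p0 in range(0, k, ts):  # march along the shared dimension
                a_tile = a[i0:i0 + ts, p0:p0 + ts]  # "load tile into shared memory"
                b_tile = b[p0:p0 + ts, j0:j0 + ts]
                acc += a_tile @ b_tile              # reuse each loaded element ts times
            c[i0:i0 + ts, j0:j0 + ts] = acc
    return c
```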

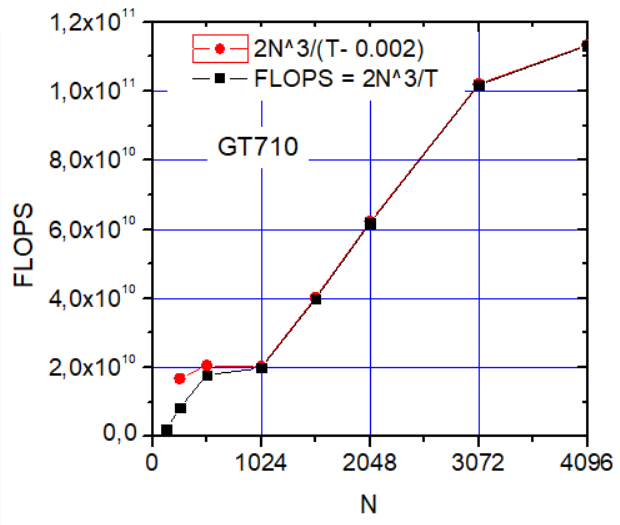

For some reason, GT 710 performance depends strongly on N.

To get the "pure" performance, overheads are subtracted below.
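This page does not say how the overhead is measured. This is a minimal sketch of the correction, assuming the overhead time is measured separately, for example as the same run with the kernel work removed. The function name and parameters are hypothetical.

```python
def pure_gflops(n: int, total_s: float, overhead_s: float) -> float:
    """GFLOPS of the GEMM kernel alone for an n x n x n multiplication.

    total_s:    measured wall time of one full run (dispatch + kernel + readback)
    overhead_s: separately measured time of everything except the kernel work
    """
    kernel_s = total_s - overhead_s
    if kernel_s <= 0:
        raise ValueError("overhead must be smaller than the total time")
    return 2 * n**3 / kernel_s / 1e9  # FMA counted as 2 FLOPs
```

Because the subtracted overhead is roughly constant while the FLOP count grows as N³, the correction matters most for small N.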

IMHO, even N = 2048 is too large for real ML applications. Batched routines are used to accelerate smaller problems. In the simplest case, we just need to multiply rectangular matrices (see the example to the right).


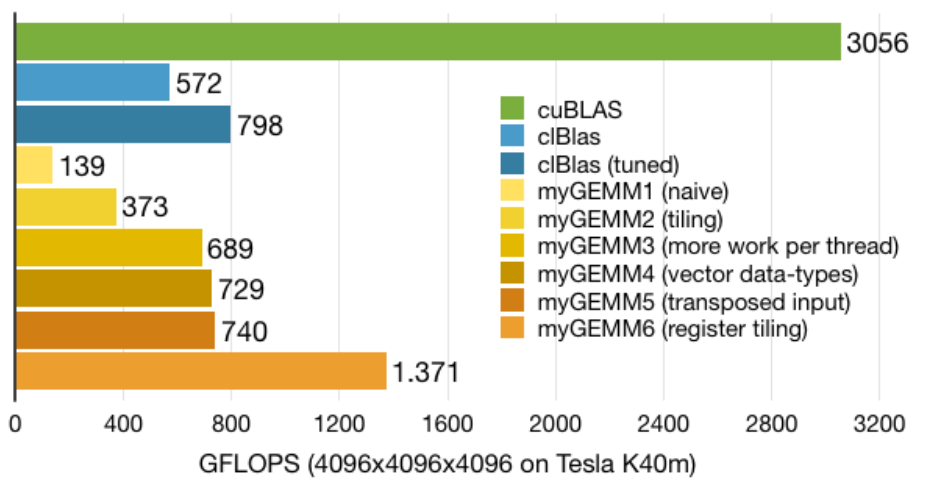
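The NumPy sketch below shows what a batched routine computes and how it relates to a single rectangular GEMM. `batched_gemm` multiplies independent pairs. `shared_weight_batch` covers one reading of the "simplest case": when every batch entry uses the same right-hand matrix, the batch collapses into one tall (batch·m x k) by (k x n) multiplication. Both names are hypothetical. The GPU mapping mentioned in the comment, one work-group slice per batch entry via `gl_WorkGroupID.z`, is an assumption and is not taken from this page.

```python
import numpy as np


def batched_gemm(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """C[i] = A[i] @ B[i] for a batch: a (batch, m, k), b (batch, k, n) -> (batch, m, n)."""
    if a.ndim != 3 or b.ndim != 3 or a.shape[0] != b.shape[0] or a.shape[2] != b.shape[1]:
        raise ValueError("expected shapes (batch, m, k) and (batch, k, n)")
    batch, m, _ = a.shape
    c = np.empty((batch, m, b.shape[2]), dtype=np.result_type(a, b))
    for i in range(batch):  # on a GPU, e.g. one work-group slice per i (gl_WorkGroupID.z)
        c[i] = a[i] @ b[i]
    return c


def shared_weight_batch(a: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Every batch entry uses the same w (k, n): one rectangular GEMM does the whole batch."""
    batch, m, k = a.shape
    return (a.reshape(batch * m, k) @ w).reshape(batch, m, w.shape[1])
```

NumPy's `a @ b` already broadcasts over the leading batch dimension. The explicit loop only makes the per-entry structure visible.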

Tuning GEMM for Intel GPU

GEMM tests on RTX 2070